Publishing and sourcing linked data: where do you start?


Since the end of 2018, the newspapers from News of the Great War have been enriched with linked data. This makes the data machine-readable: it is easy to ingest and process automatically, so even a computer can make sense of it. We use well-known, standard ontologies such as Dublin Core Terms, Schema.org and Friend of a Friend (FOAF) to map the metadata to linked data fields.

Choosing the right ontology

The meemoo metadata model, which is also used for the newspapers from News of the Great War, can already be mapped to Dublin Core Terms (dcterms for short), for instance. For this project, however, we mainly (but not exclusively) structured the newspaper data in our database with Schema.org. This ontology is backed by a large, enthusiastic community and is updated regularly. Moreover, Schema.org was developed by, among others, several major search engines, which can help the pages rank better in search results. The fact that it supports newspapers was another decisive factor in choosing this ontology.

However, not every characteristic and relation has a corresponding term in Schema.org. For example, it is not possible to indicate the page number of a newspaper. The Semanticscience Integrated Ontology (SIO) does make this possible (see http://semanticscience.org/resource/SIO_000787.rdf). That is why we extended the Schema.org model with other ontologies. When a characteristic or relation can be expressed both in Schema.org and in another frequently used ontology, such as dcterms or Wikidata, we use both. For example, we describe a newspaper headline with both 'headline' from Schema.org and 'title' from dcterms, which maximizes the chances that the data will be reused. By combining several ontologies in this way, we describe the newspapers as comprehensively as possible, with crucial input and expertise from IDlab.
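The idea of publishing one value under several ontologies at once can be sketched as a mapping table from internal fields to predicate IRIs. This is a minimal illustration, not meemoo's actual pipeline: the internal field names (`headline`, `date_published`, `page_number`), the `FIELD_MAP` table and the `to_jsonld` helper are hypothetical, and using the SIO term linked above as the page-number property is an assumption based on the link in the text. The output is a JSON-LD node in expanded form, so it needs no `@context`.

```python
import json

# Hypothetical internal field names: the actual meemoo database fields are not
# documented in this post. Each field maps to one or more predicate IRIs, so a
# single value can be published under several ontologies at once.
FIELD_MAP = {
    "headline": [
        "http://schema.org/headline",      # Schema.org
        "http://purl.org/dc/terms/title",  # Dublin Core Terms
    ],
    "date_published": [
        "http://schema.org/datePublished",
        "http://purl.org/dc/terms/issued",
    ],
    # Assumption: the SIO term linked in the text serves as the page-number property.
    "page_number": ["http://semanticscience.org/resource/SIO_000787"],
}


def to_jsonld(record_id: str, record: dict) -> dict:
    """Convert a flat metadata record into one expanded-form JSON-LD node."""
    node = {"@id": record_id}
    for field, value in record.items():
        # Fields without a mapping are skipped rather than guessed.
        for predicate in FIELD_MAP.get(field, []):
            node.setdefault(predicate, []).append({"@value": value})
    return node


if __name__ == "__main__":
    example = to_jsonld(
        "https://example.org/issue/1",  # hypothetical identifier
        {"headline": "Example headline", "page_number": 3},
    )
    print(json.dumps(example, indent=2))
```

Because the headline is stored under both `schema:headline` and `dcterms:title`, a consumer that only knows one of the two vocabularies still finds it.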

Publishing linked data on the website

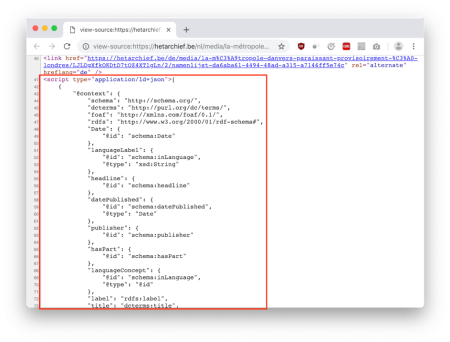

All characteristics and relations have now been translated into linked data. What's next? To publish linked data, you can use a separate interface (for example a SPARQL endpoint or a REST API), offer a data dump, or simply enrich your own website. We prefer the last option. First, a single address is easier for people to find. Second, a separate interface requires additional maintenance, which brings the risk that your website ends up more up to date than your interface. Because we publish the data as JSON-LD embedded in the web pages, users and developers can inspect the website and extract the datasets almost automatically. The data on the website is readable both by humans (the pages you see when you visit newsfromthegreatwar.be) and by machines. Read our previous tech blogs on this project to see the data (and the links!) in question.
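To show why embedding JSON-LD makes a page machine-readable, here is a minimal sketch that pulls JSON-LD out of an HTML page using only Python's standard library. It assumes the data sits in `<script type="application/ld+json">` elements, which is the usual way to embed JSON-LD in HTML; the exact markup of newsfromthegreatwar.be is not described here, and the class and function names are hypothetical.

```python
import json
from html.parser import HTMLParser


class JsonLdExtractor(HTMLParser):
    """Collect the parsed contents of every <script type="application/ld+json"> element."""

    def __init__(self):
        super().__init__()
        self._in_jsonld = False
        self._buffer = []
        self.documents = []

    def handle_starttag(self, tag, attrs):
        if tag == "script" and dict(attrs).get("type") == "application/ld+json":
            self._in_jsonld = True
            self._buffer = []

    def handle_data(self, data):
        # Script contents may arrive in several chunks, so buffer them.
        if self._in_jsonld:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == "script" and self._in_jsonld:
            self.documents.append(json.loads("".join(self._buffer)))
            self._in_jsonld = False


def extract_jsonld(html: str) -> list:
    """Return every JSON-LD document embedded in an HTML string."""
    parser = JsonLdExtractor()
    parser.feed(html)
    parser.close()
    return parser.documents


if __name__ == "__main__":
    page = (
        '<html><head><script type="application/ld+json">'
        '{"@context": "https://schema.org", "name": "Example"}'
        "</script></head><body><p>Readable for humans</p></body></html>"
    )
    print(extract_jsonld(page))
```

The same page serves both audiences: a browser renders the body for people, while a script like this reads the embedded data without a separate API.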

Licences for linked data

So far we have talked about linked data, not linked 'open' data. The reason is that part of the material is still in copyright. The basic metadata of each newspaper is in the public domain and can indeed be used as linked open data. The newspapers themselves, however (the scans and the text obtained through OCR), may still be in copyright. You can find more information on copyright here.

Consulting linked data

You can consult the newspapers, the linked names and their metadata as structured data in various ways, and tools for this already exist. We discuss two of them.

1. ldfetch

ldfetch lists the linked data triples (or RDF triples) published at a given web address. For a newspaper page, you receive a neat list of all metadata related to that page, including all names identified from the List of Names. ldfetch then moves on to the next publication in the list via http://www.w3.org/ns/hydra/core#next, and keeps going until the end of the list is reached. The command below retrieves all of this metadata and writes it to the file hetarchief.ttl in Turtle format. You can then search through the RDF triples in this file or load them into a tool such as Comunica. Run the following on the command line:

ldfetch https://hetarchief.be/nl/media/gazet-van-brussel-nieuwsblad-voor-het-vlaamsche-volk/A157MbRehXO7ISSJVLwBGV5g -p "http://www.w3.org/ns/hydra/core#next" > hetarchief.ttl
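The "follow hydra:next until the list ends" behaviour that the `-p` option asks for can be sketched as a simple loop. This is not ldfetch's implementation: ldfetch performs the HTTP requests and RDF parsing itself, whereas this sketch abstracts fetching into a `fetch` function and uses hypothetical in-memory pages so it runs offline. The `follow_next` helper, the `max_pages` limit and the loop guard are illustrative assumptions.

```python
from typing import Callable, Iterator, List, Optional, Tuple

HYDRA_NEXT = "http://www.w3.org/ns/hydra/core#next"
Triple = Tuple[str, str, str]


def follow_next(
    start_url: str,
    fetch: Callable[[str], List[Triple]],
    max_pages: int = 1000,
) -> Iterator[Triple]:
    """Yield every triple from start_url and from each page reached via hydra:next."""
    url: Optional[str] = start_url
    seen = set()
    while url is not None and len(seen) < max_pages:
        seen.add(url)
        triples = fetch(url)
        yield from triples
        # Continue with the first hydra:next target not visited yet (guards against loops).
        url = next((o for _, p, o in triples if p == HYDRA_NEXT and o not in seen), None)


# Hypothetical in-memory "pages" standing in for HTTP requests.
PAGES = {
    "https://example.org/p1": [
        ("https://example.org/p1", "http://purl.org/dc/terms/title", "Page one"),
        ("https://example.org/p1", HYDRA_NEXT, "https://example.org/p2"),
    ],
    "https://example.org/p2": [
        ("https://example.org/p2", "http://purl.org/dc/terms/title", "Page two"),
    ],
}

if __name__ == "__main__":
    for triple in follow_next("https://example.org/p1", lambda u: PAGES.get(u, [])):
        print(triple)
```

Collecting everything the generator yields gives the same kind of result as the redirect to hetarchief.ttl: one combined set of triples from all pages.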


2. Comunica

Comunica lets you pose a single question across several websites and linked data interfaces at once, without having to work out how to address each kind of interface and how to combine the results. More information on using this platform (in English) is available on GitHub.
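To give a feel for what "one question across several sources" involves, here is a toy sketch in plain Python. It does not use Comunica or its API (Comunica is a JavaScript framework that evaluates SPARQL queries); it only shows the underlying idea of matching triple patterns across sources and joining the results. The two in-memory sources, the `ex:` identifiers and the use of `schema:mentions` to link an issue to a person are hypothetical.

```python
from typing import Dict, List, Optional, Tuple

Triple = Tuple[str, str, str]
SCHEMA_MENTIONS = "http://schema.org/mentions"
FOAF_NAME = "http://xmlns.com/foaf/0.1/name"


def match(
    sources: Dict[str, List[Triple]],
    s: Optional[str] = None,
    p: Optional[str] = None,
    o: Optional[str] = None,
) -> List[Tuple[str, Triple]]:
    """Return (source name, triple) pairs matching the pattern in any source; None is a wildcard."""
    results = []
    for name, triples in sources.items():
        for triple in triples:
            if all(want is None or want == got for want, got in zip((s, p, o), triple)):
                results.append((name, triple))
    return results


# Hypothetical sources: one describing newspaper issues, one describing people.
SOURCES = {
    "newspapers": [("ex:issue1", SCHEMA_MENTIONS, "ex:person1")],
    "names": [("ex:person1", FOAF_NAME, "Example Person")],
}

# Step 1: who is mentioned in ex:issue1? Step 2: look up their names in any source.
mentioned = [t[2] for _, t in match(SOURCES, s="ex:issue1", p=SCHEMA_MENTIONS)]
names = [t[2] for person in mentioned for _, t in match(SOURCES, s=person, p=FOAF_NAME)]
print(names)
```

The join in step 2 only works because both sources use the same identifier for the person; a query engine such as Comunica automates this kind of matching over real, remote interfaces.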

Now it's your turn

You can now get started with the linked data from the wartime newspapers on News of the Great War. This project started with entity detection and went on to investigate the reliability of certain links and the best ways to publish our structured data. We learned a lot along the way, with the help of our partners IDlab and PACKED. We hope you also got something out of this process and the three tech blogs that resulted from it. Do you have any questions? Ask us via support@meemoo.be.