Attestation: is it a good match?


In our previous tech blog, we explained how we looked for links between names in the List of Names and newspapers from the News from the Great War collection. Once we’d found them, we wanted to determine whether we’d made the right matches and found the right person from the List of Names. In technical terms, this post explains how we verify whether entities from the two databases actually correspond to each other.

Building up the corpus

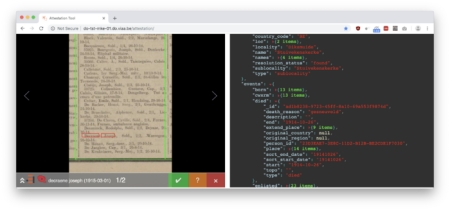

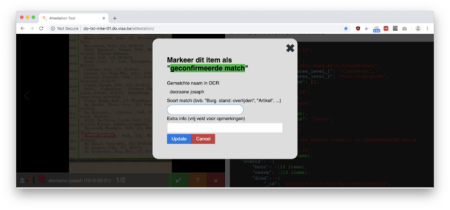

We want to estimate reliably whether a person in one database is the same person as in the other. With over a million potential matches, this has to be done automatically. To draw up the rules and algorithms that link a person in the List of Names from In Flanders Fields Museum to a name in our newspaper database as accurately as possible, we first built an attestation tool (the source code is available on GitHub). The tool makes it easy to determine manually whether the person from the List of Names is the person named in the newspaper: it places all known relevant information about that person next to the name in the newspaper.

Users can then classify the link as:

match

no match

possible match

This enabled us to build a small ‘corpus’ of 468 links that have been verified by hand, which we then use for further analysis and improvement of the automatic attestation algorithm.

Automatic attestation

It is of course impossible to check a million links manually, so we built a tool to verify the links automatically. This automatic attestation assigns a reliability score to each link and is refined in an iterative process, which we explain in detail below. In each iteration, the reliability scores are calculated first and then verified manually. We adapt the algorithm on the basis of that manual check, run a new iteration with other items, and keep repeating this process to expand the corpus.

1. Determine reliability score

The reliability score is calculated on the basis of the fields available in the List of Names API.

These are: born place locality, died age, died place locality, enlisted regt0, died date, homeaddress, born date, died place name, enlisted number, enlisted regt1, born place name, enlisted rank, profession, memorated place locality, died place topo, memorated place name, victim type details, home address topo, memorated date, work place locality, enlisted sub, employer, school name, enlisted army, work company

Two questions that determine the reliability score are:

are any other parameters mentioned alongside the first name and surname?

how far away (in words) do these parameters appear from the name in the newspaper?
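These two questions can be illustrated with a minimal Python sketch. It is not the code of our attestation tool: the function names, the tokenisation, the sample sentence and the name "Jan Peeters" are all invented for the example. It looks up each known field value in the newspaper text and reports how many words separate it from the name.

```python
import re

def tokenize(text):
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"\w+", text.lower())

def find_phrase(tokens, phrase):
    """Return every start index at which the phrase occurs in tokens."""
    words = tokenize(phrase)
    if not words:
        return []
    width = len(words)
    return [i for i in range(len(tokens) - width + 1)
            if tokens[i:i + width] == words]

def field_distances(article, name, fields):
    """Map each field found in the article to its minimum word distance.

    The distance is counted from the first word of the name to the first
    word of the field value (a simplifying assumption of this sketch).
    """
    tokens = tokenize(article)
    name_hits = find_phrase(tokens, name)
    found = {}
    for field, value in fields.items():
        value_hits = find_phrase(tokens, value)
        if name_hits and value_hits:
            found[field] = min(abs(v - n) for n in name_hits for v in value_hits)
    return found

# Invented example data, using field names from the list above.
article = "Private Jan Peeters of the 3rd Regiment, a baker from Ypres, died on Monday."
fields = {
    "profession": "baker",
    "born place locality": "Ypres",
    "enlisted rank": "Private",
    "employer": "Railway",  # not mentioned in the article, so not reported
}
distances = field_distances(article, "Jan Peeters", fields)
print(distances)
```

Fields that do not occur in the text are simply left out of the result, so the number of entries answers the first question and the values answer the second.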

2. Analysis of calculated reliability scores

Every parameter that is found affects the final score. A high score implies a high probability of a match; a low score implies that the link is probably not a match. The scores are also used to identify the cases that are most interesting for manual verification. We used the attestation tool to investigate how much each field influenced the reliability score, and compared the calculated scores with the classifications of the links that had already been checked manually.
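One simple way to look at the impact of a field is to ask how often hand-checked links that mention it turned out to be a match. The sketch below is an illustration under assumptions, not our actual analysis: the record layout (`fields`, `label`) and the four sample links are invented.

```python
from collections import Counter

def match_rate_per_field(links):
    """Share of hand-checked links mentioning a field that were labelled 'match'."""
    seen = Counter()
    matched = Counter()
    for link in links:
        for field in link["fields"]:
            seen[field] += 1
            if link["label"] == "match":
                matched[field] += 1
    return {field: matched[field] / seen[field] for field in seen}

# Invented hand-checked links; labels follow the three classes of the attestation tool.
links = [
    {"fields": ["enlisted number", "born place locality"], "label": "match"},
    {"fields": ["born place locality"], "label": "no match"},
    {"fields": ["enlisted number"], "label": "match"},
    {"fields": ["born place locality", "profession"], "label": "possible match"},
]
rates = match_rate_per_field(links)
for field, rate in sorted(rates.items(), key=lambda item: -item[1]):
    print(f"{field}: {rate:.0%}")
```

In this toy data the enlisted number always coincides with a match and the place of birth only sometimes, which is the kind of difference that would justify giving the fields different weights.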

3. Adjust reliability score calculation

It quickly became clear that the fields differ in how strongly they indicate a match. A regiment number near a name, for example, is a better indicator than a city of birth near a name. More specific data, such as a street name, has more impact than less specific data, such as a city. We refined the calculation on the basis of the analysis from the previous step.

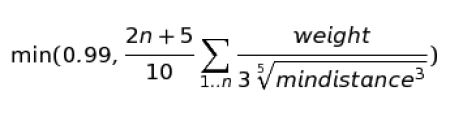

This process was run through many times and we ultimately accepted this formula for automatically calculating the reliability score:

The following points are important here:

The reliability score is capped at 99%.

‘n’ stands for the number of extra data fields found; the more fields found, the higher the probability of a match.

Each data field has its own weight, which is determined in the iterative process. Finding a regiment number, for example, has a higher impact on the score and so is weighted more heavily than finding the age of the deceased.

‘mindistance’ is the minimum distance (in words) from the linked name to a data field (e.g. profession) that is found.

The formula has been developed so that it matches the manual attestation as closely as possible. However, the score is still based on a relatively small data set of 468 links that have been verified by hand, and could certainly be refined with further iterations.
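To make the ingredients listed above concrete, here is a minimal Python sketch of how they could be combined. This is not the formula we ended up with: the way the weights are combined, the `distance_scale` parameter and all the weight values are assumptions made up for the illustration. Only the ingredients themselves (the 99% cap, the number of fields found, a weight per field and the minimum distance) come from the description above.

```python
def reliability_score(found, weights, distance_scale=50, cap=0.99):
    """Hypothetical score in [0, cap] for one link.

    found:   dict mapping each field found near the name to its word distance
    weights: dict mapping field names to a weight between 0 and 1 (assumed values)
    """
    if not found:
        return 0.0
    # Treat each field as a separate piece of evidence: every extra field
    # (a larger n) shrinks the remaining doubt, heavier fields shrink it more.
    doubt = 1.0
    for field in found:
        doubt *= 1.0 - weights.get(field, 0.0)
    evidence = 1.0 - doubt
    # Damp the score when even the closest field is far from the name.
    mindistance = min(found.values())
    proximity = 1.0 / (1.0 + mindistance / distance_scale)
    # The score never reaches 100%.
    return min(cap, evidence * proximity)

# Assumed weights: a regiment number counts more than the age of the deceased.
weights = {"enlisted number": 0.8, "died age": 0.2, "born place locality": 0.3}

one_field = reliability_score({"died age": 5}, weights)
two_fields = reliability_score({"died age": 5, "enlisted number": 5}, weights)
far_away = reliability_score({"died age": 200, "enlisted number": 200}, weights)
print(one_field, two_fields, far_away)
```

With these assumptions, the score rises when more fields are found, falls when the nearest field is further from the name, and stays below 100% however strong the evidence.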

Result

The attestation cycle was run through several times for the links between the List of Names and the News from the Great War collection. This resulted in a reliability score per link which indicates how likely it is that the person in the List of Names corresponds with the person mentioned in the newspaper.

You can see the distribution of the impact per metadata field in the following image, which presents the data as a box plot.

All links with a reliability score higher than 0% were published on News from the Great War, so a link is published even if there is just a 1% chance of a match. This means users can see for themselves whether the name in the newspaper corresponds with the one in the List of Names. Ultimately, 10.6% of all possible links were published (121,986 of 1,153,881), each with an indication of its reliability score. We will explain how we did this, and the method for looking up linked data, in a subsequent tech blog about linked data.
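The publication rule and the percentage above can be checked with a few lines of Python. The two totals come from this post; the `score` field and the sample links are invented for the example.

```python
def publishable(links):
    """Keep every link whose reliability score is above zero."""
    return [link for link in links if link["score"] > 0]

# Invented sample: only the link with a score of 0 is withheld.
sample = [{"id": 1, "score": 0.0}, {"id": 2, "score": 0.01}, {"id": 3, "score": 0.99}]
kept = publishable(sample)
print([link["id"] for link in kept])

# Totals reported in this post.
published, candidates = 121_986, 1_153_881
share = round(100 * published / candidates, 1)
print(f"{share}% of all possible links were published")
```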